White Paper

How advanced Data Center Infrastructure Management (DCIM) tools are solving the data center operations management crisis

Date: 18th January 2024

AUTHOR(S): VARIOUS

DATA CENTER INFRASTRUCTURE MANAGEMENT (DCIM)

Executive Summary

This paper advocates for a revolutionary approach to Data Center Infrastructure Management (DCIM), emphasizing the need to move beyond outdated methodologies. Traditional DCIM has failed to effectively manage data center assets, resources, and workflows, often relying on basic tools like MS Excel. This inadequacy calls for a new, data-driven model that transforms extensive data into actionable insights and intelligent analytics, integrating diverse data sets across various disciplines.

The proposed model transcends mere infrastructure monitoring, focusing on generating valuable management information through sophisticated tools. It also highlights the necessity of a federated platform to manage interdependencies between facility and IT layers, enhancing operational transparency for stakeholders such as clients, governments, and regulators.

The document draws on insights from the RiT Tech Round Table event held in June 2023, at which three workgroups explored the challenges and opportunities for advancing DCIM.

Introduction

This paper aims to demystify the reasons that have hindered the widespread adoption and efficacy of data center infrastructure management (DCIM) tools and information. It delves into the core reasons why legacy DCIM solutions have failed to fulfill their intended promise and examines the role of innovative new entrants in the DCIM space, who are redefining the landscape by transforming DCIM tools into proactive and intelligent resources. Through this exploration, the study seeks to uncover actionable insights that can guide the evolution of DCIM into a more effective and valuable asset for data center operations.

Data center operations, much like other industries, face a myriad of challenges, ranging from human resource and operational management through to environmental, social, and governance (ESG) issues. However, this industry faces a unique and globally significant concern: the ever-increasing demand for power and the concurrent issue of its dwindling availability. This challenge is further compounded by specific operational intricacies, such as the use of many different types of cooling systems, the management of high-compute environments, the vast range of IT deployments and power densities to be supported, and the historic lack of accurate metrics for optimizing data center performance beyond the single benchmark of Power Usage Effectiveness (PUE).

Officially documented as accounting for circa 1.5% to possibly 2% of global greenhouse gas emissions, the sector is increasingly concerned about energy efficiency and faces new directives and legislation in many countries and regions. Pressure is mounting for all data center operators and investors to meet the new non-financial reporting directives, with deadlines fast approaching for the CSRD (covering financial years beginning January 2024, with first reports published in 2025), the EED (from mid-2024) and other ESG obligations around the world.

This paper explores how a lack of operational data transparency, whether because hardware providers work to their own rules and keep their proprietary protocols closed, or simply because management systems are run manually with limited use of the data already available, leaves the industry ill-prepared to manage complex data center estates effectively.

The question is: can automation through advanced DCIM tools transform this challenge into an opportunity for the industry?

Following a Round Table strategy day on 15th June 2023, this paper has been written to address three critical factors for data center operators:

Chapter 1: Operational Data vs Useful Information

The Challenges Faced by DC Operators

Chapter 2: Compliance Obligations

Solving the Mystery on Industry-based standards Reporting

Chapter 3: Operational Efficiency

Day to Day Management and Challenges of the DC

Universal Intelligent Infrastructure Management Explained

When RiT Tech introduced the idea of a Universal Intelligent Infrastructure Management (UIIM) framework three years ago, its vision was to create a unified data set that could coherently monitor, manage, and verify all the components affecting the performance of a data center: the IT stack, the physical environment, data center operations, and the integration of software systems such as BMS, EMS, PMS, CMMS and CRM.

It is the purpose of this paper to define what advanced DCIM can look like, and how through the methodology of Universal Intelligent Infrastructure Management, the industry can achieve its transparency goals.

No single tool can deliver everything the industry requires of DCIM. A unified data set will require industry collaboration and multiple integrations across disparate asset classes, proprietary tools and data sets, together with the ongoing collation and analysis of performance data, involving every element of the data center ecosystem.

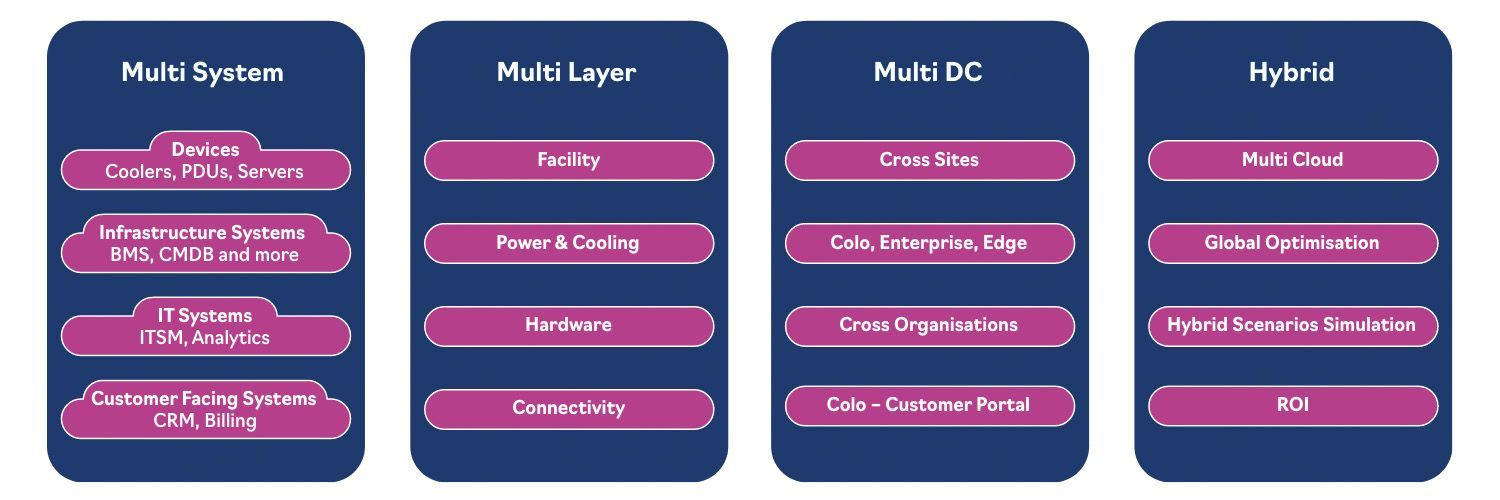

UIIM is an evolution of DCIM aimed at empowering I&O leaders by offering ‘more universal’ and ‘more intelligent’ management information. In summary:

- With a single unified data set, UIIM addresses planning, implementation, management, and operational control of the data center environment.

- It considers the entire stack of infrastructure layers and their interdependencies, including environment and facility, IT hardware, power, and network connectivity, as well as software services.

- It applies to all data center environments, including colocation, enterprise data centers, edge and dark sites, public and private cloud, and hybrid deployments.

- It aims to optimize resource utilization, including energy, BOM, people, SLAs, operational costs, and sustainability reporting.

The Challenge

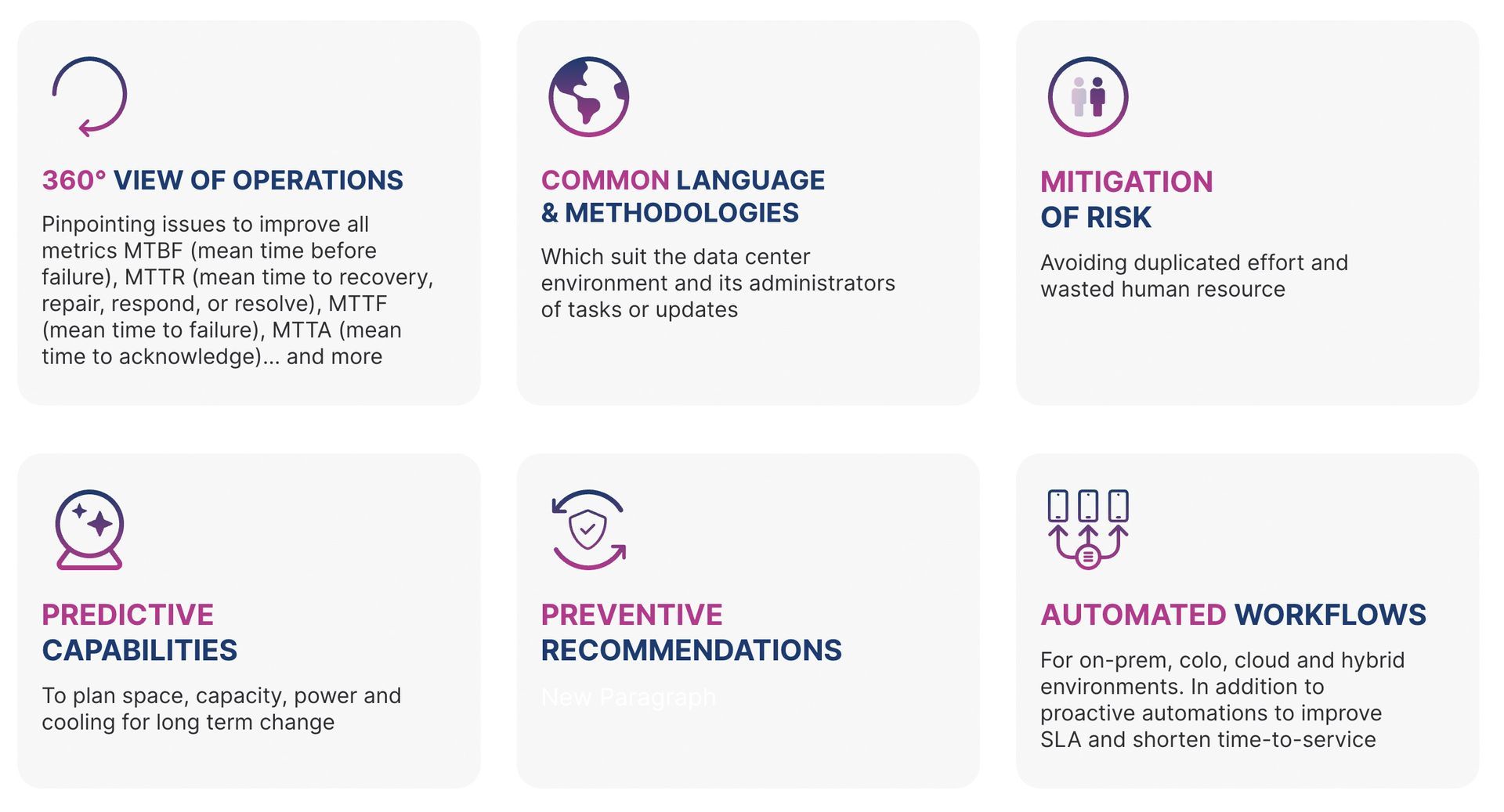

When it comes to DCIM, no one-size-fits-all solution can serve the diverse needs of operators. UIIM brings new capabilities to integrate with all physical devices and software systems, ultimately powered by automation and AI tools. As users move to more bespoke tools, the AI function will require specific training on each operator's protocols, together with deep analytics. This level of capability will take infrastructure management to a new level, providing actionable insights and automating business processes to boost operational efficiency.

Why Now?

There are numerous reasons for repositioning DCIM but chief among them is that data centers have never had a solution that delivered on the promise of effectively managing data center assets, resources, and operational workflows - ‘the single source of truth’.

As a sector, we need to move to a more sophisticated, data-driven model: one with clear outputs and definitions, and a better understanding of the management features and functionalities required of a system capable of managing data center infrastructure across the multiple silos and disciplines involved. Added to this is the need for data from several sources to be coordinated across a distributed estate and subjected to intelligent analytics that turn the individual data sets into useful management information.

It is no longer sufficient simply to ‘monitor’ infrastructure. The industry needs to turn its lakes of collected data into useful information and actionable insights. Furthermore, such insights should be delivered as detailed step-by-step recommendations and, potentially, implemented proactively through process automation. Legacy DCIM solutions have not delivered these capabilities or a demonstrable ROI, as evidenced by the fact that MS Excel remains the most widely used ‘DCIM’ tool. It is time to consign the old concept of DCIM to the same fate as VHS in the streaming era.

The need for a federated platform capable of managing the interdependencies between the facility and IT layers of the infrastructure is now critical to enable data center operators to provide operational transparency to clients, governments, ESG regulators, third parties, sub-contractors, remote management with smart hands services and many more at the touch of a button without additional human resource overhead.

In the pages that follow, we set out the findings of the RiT Tech Round Table event in June 2023 and of its three workgroups, detailed in Chapters 1–3.

Round Table Findings 15th June 2023 – Executive Summary

Over 20 companies attended the DCIM Round Table in June 2023. Made up of operators, MSPs, professional services firms and hardware suppliers, the session confirmed six key issues:

KEY ISSUE 1: Comprehensive functionality

All three working parties agreed on the need to address the unique needs of operators, allowing them to tailor a solution to their own estates and protocols. Savvy data center operators want far more than just monitoring. They are looking for an increasingly comprehensive combination of asset management, connectivity management, power and energy management, network connectivity, work orders, task and reporting capabilities, robust capacity planning, vigilant alerting, and much more. They also want seamless integrations with their own systems and e-platforms, along with data-driven insights that enhance decision-making.

KEY ISSUE 2: Sustainability

Now that ESG has taken center stage for governments, organizations increasingly recognize the true potential of DCIM in helping to manage and report key metrics such as power and energy consumption, along with other environmental impacts relevant to their green and sustainability obligations. Critical to this is the diversity of data needed to report effectively under legislation such as the CSRD.

KEY ISSUE 3: AI-powered solutions

The transformative potential of artificial intelligence in data center infrastructure management has captured the attention of customers. AI-powered DCIM capabilities are being introduced to offer task automation, predictive planning, problem identification and optimization opportunities in a proactive manner. But this is only the beginning: DCIM tools must deliver efficiencies through automation, and AI is one route to achieving them.

KEY ISSUE 4: Customisation and modularity

Due to the unique needs of operators, organizations will only opt for DCIM solutions that adapt to their needs. DCIM users value modularity, the freedom to add features and choose tools appropriate to their business as needed, all coupled with the flexibility to manage projects in phases. Whether starting small and scaling up or enhancing functionalities gradually, providers must cater to specific and nuanced requirements. No longer is it satisfactory to buy a product and not have someone on hand to make the changes needed during implementation or adapt the product responsively.

KEY ISSUE 5: Vendor knowledge and collaboration

In a rapidly evolving technology landscape, customers need DCIM providers that can move quickly. They are looking for vendors with deep knowledge of complex data center environments, who will also simplify implementation and management and make the customer experience as easy and intuitive as possible. Customers are increasingly rejecting closed systems, whether hardware or software, that use proprietary protocols or that favor integration only with systems from the same manufacturer, because they lack flexibility and integration and management capabilities. For DCIM to succeed, manufacturers and DCIM software providers must collaborate under an agreed set of industry standards; the absence of such standards is deemed the single most important barrier to wider adoption.

KEY ISSUE 6: Scalability and security

Scalability and security are paramount concerns in today’s data-centric world. Customers expect DCIM solutions to scale alongside their expanding operations, and they demand robust security measures to safeguard their valuable data. DCIM tools must be fully compliant with data protection and security regulations, not only in traditional networks but also in private and hybrid cloud environments, by applying the latest security standards.

Chapter 1

Management Information: The Challenges Faced by Data Center Operators

RFP Requirements Example 1

Customization and modularity: Organisations will only opt for DCIM solutions that adapt to their unique needs. They value modularity, the freedom to add features as needed, and the flexibility to manage projects in phases. Whether starting small and scaling up or enhancing functionalities gradually, providers must cater to specific and nuanced requirements. No longer is it satisfactory to buy a product and not have someone on hand to make the changes needed or adapt the product responsively.

Overview

Management Information: The Challenges Faced by Data Center Operators

To achieve the ambitious goals of many governments, such as improving energy and operational efficiency, unhindered access to all the operational data available in a data center is vital to creating a single federated data lake.

As responsible software developers, we see taking the lead in engaging with all key stakeholders in the data center ecosystem as crucial, from hardware manufacturers to single-vendor systems that run BMS or environmental monitoring. In essence, integrating with as many proprietary protocols as possible will create a coherent set of data that becomes invaluable for the operator.

The data center infrastructure management industry is poised for a series of dramatic and transformative changes. These changes, driven by technological advancements and evolving market demands, signal a new era in how we manage and operate data centers.

In this chapter, we explore key movements, from the advent of unified data platforms to the emphasis on sustainability, and show how universal interoperability, advanced management tools, AI-driven efficiency, and sustainability management are not just buzzwords and trends, but essential pathways towards a more connected, efficient, and environmentally responsible digital infrastructure.

Unified Data Platforms: The future of DCIM hinges on the integration of disparate data sources into a unified platform. These platforms will not only consolidate data from various devices and IT infrastructure but also feature sophisticated tools for data validation, anomaly detection, and analytics to handle large volumes of data and offer real-time insights.

Advanced Management and Planning Tools: The introduction of tools that incorporate AI for predictive maintenance and anomaly detection, as well as digital twins for strategic planning, will transition DCIM to a more proactive model. This approach will improve operational efficiency by predicting equipment issues, monitoring anomalies, and simulating physical data centers.

AI and Automation-Driven Efficiency: AI and automation are predicted to overhaul data center operations by automating complex tasks like resource planning. The integration of AI will streamline the management of cooling, power, and networking operations, enhancing asset lifecycle management and overall operational efficiency.

Sustainability Management Tools: Sustainability is becoming a pivotal aspect of DCIM, with tools emerging to manage everything from carbon footprint tracking to compliance with environmental regulations. These tools will facilitate the analysis of energy consumption patterns, the adoption of renewable energy sources, water optimization, efficient IT stack usage, energy reuse, waste reduction, and the implementation of eco-friendly practices.
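The unified data platform described above rests on three basic steps: mapping records from disparate systems onto one schema, validating them, and flagging anomalies. The Python sketch below illustrates those steps under stated assumptions. The record field names, the `Reading` schema, the plausibility ranges and the three-standard-deviation threshold are all hypothetical choices for illustration, not part of any product or standard.

```python
from dataclasses import dataclass
from statistics import mean, pstdev

@dataclass(frozen=True)
class Reading:
    """One measurement in a hypothetical unified schema."""
    asset_id: str
    metric: str   # e.g. "power_kw" or "inlet_temp_c"
    value: float
    source: str   # originating system, e.g. "BMS" or "PDU"

# Hypothetical plausibility limits used for validation.
VALID_RANGES = {"power_kw": (0.0, 50.0), "inlet_temp_c": (5.0, 45.0)}

def normalize(raw: dict, source: str) -> Reading:
    """Map a source-specific record onto the unified schema.

    The input field names ("id", "kw", "watts", "temp") are assumptions;
    each real BMS, PDU or sensor feed would need its own mapping.
    """
    if "kw" in raw:
        return Reading(raw["id"], "power_kw", float(raw["kw"]), source)
    if "watts" in raw:  # convert watts to kilowatts
        return Reading(raw["id"], "power_kw", float(raw["watts"]) / 1000.0, source)
    if "temp" in raw:
        return Reading(raw["id"], "inlet_temp_c", float(raw["temp"]), source)
    raise ValueError(f"no mapping for record from {source}: {raw}")

def is_valid(reading: Reading) -> bool:
    """Reject values outside the plausibility range for their metric."""
    low, high = VALID_RANGES[reading.metric]
    return low <= reading.value <= high

def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    sigma = pstdev(history)
    if sigma == 0:
        return latest != history[0]  # any change from a flat history is unusual
    return abs(latest - mean(history)) / sigma > threshold

feeds = [
    ({"id": "rack-01", "kw": 4.2}, "PDU"),
    ({"id": "rack-01", "watts": 4300}, "BMS"),
    ({"id": "crah-02", "temp": 99}, "EMS"),  # implausible inlet temperature
]
readings = [normalize(raw, source) for raw, source in feeds]
valid = [r for r in readings if is_valid(r)]
print(len(valid))                               # 2
print(is_anomalous([4.1, 4.2, 4.3, 4.2], 9.8))  # True
```

The z-score test is deliberately simple; a production platform would more likely use seasonal or model-based detection, but the shape of the pipeline (normalize, validate, then analyze) stays the same.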

Challenges

As we look to the future of data center infrastructure management, advancements in technology will revolutionize not just the industry, but also the roles of those at its helm. The challenge for the data center industry in meeting operational and compliance obligations is to heavily invest in research and development, upskill the workforce, and create innovative management practices that can handle the demands of future data center operations.

Leadership Transformation: As AI and automation take over routine tasks, data center leaders and managers must adapt to a radically transformed role. The challenge lies in developing the skills and strategies needed for effective strategic oversight, where the focus is on leveraging predictive analytics and automation to maintain high efficiency and minimize downtime.

Challenge of Developing Unified Platforms: The intensifying demand for AI and LLMs will require the creation of sophisticated unified platforms capable of supporting heavy computational loads and extensive data generation. The challenge is to engineer systems that can aggregate and analyze data efficiently while also adhering to energy efficiency and sustainability standards.

Achieving Interoperability: The increasing complexity and geographical dispersion of data centers present the challenge of ensuring interoperability and scalability. The integration of diverse environments and the incorporation of new technologies, such as edge computing and liquid cooling, must be seamless, especially in colocation environments, to maintain competitive operational efficiency.

Advanced Tool Development: With the growth of infrastructure and a push for higher operational efficiency, there is a challenge in developing tools that can manage the complexity of modern, dispersed data center environments. These tools must provide consistent power and defend against various threats, adapting to the needs of geo-distributed data centers and edge.

Managing Complexities through AI and Automation: The surge in data and computational demands leads to the challenge of managing large-scale data centers efficiently. AI and automation are critical in addressing these complexities, but their integration poses its own set of challenges, including ensuring smooth operations, optimal resource allocation, and the incorporation of innovative cooling and renewable energy sources.

Challenge of Environmental Responsibility and Compliance: The increasing focus on environmental responsibility and the impact of regulations like the CSRD and EED bring forth the challenge of meeting high standards for sustainability. Data centers must develop advanced tools for managing and improving sustainability metrics to ensure compliance with environmental regulations and stakeholder expectations.

Outcomes of Workshop One

Workshop 1: Operational Data Information: The Challenges Faced by DC Operators

The group highlighted six potential opportunities for change:

Harnessing the Potential of AI: AI will play a pivotal role in extracting insights from large data sets, but in the data center there is one major hurdle: the data is either locked within the manufacturer’s assets behind proprietary protocols, or there is no way of collecting it at all. In addition to the multiple layers within the data center, a substantial portion of data center operations relies on mechanical and electrical systems, and the absence of a collaborative approach to extracting relevant information from this hardware impedes progress in data center infrastructure management (DCIM). To drive meaningful change, it is essential to involve a broader spectrum of stakeholders, including manufacturers, software providers, and management tool providers. Without their collaboration, we risk remaining trapped in a stagnant state, hindering advancement for years to come.

Large Volumes of Data and Working Towards Aligned Goals: By embracing a shared vision, such as achieving net-zero emissions, organizations can synchronize their efforts and move towards a realistic outcome. We need to establish a sense of collective responsibility and promote cross-functional cooperation. As already noted, this ambitious endeavour requires the active participation of all stakeholders, from manufacturers to MSPs, operators, software developers and even advocates of the circular economy, collectively leveraging data to generate useful management information.


Standardized Data Exchange Protocols: Adopting a standardized approach raises the question of adopting open-source models to foster collaboration and ensure everyone is working towards a common goal. A standardized approach and shared direction can significantly enhance decision-making and generate better outcomes. This way we can put in place scenario testing and planning to allow organisations to evaluate different outcomes within their own estates and benchmark them against available industry data.

Tailored Dashboards for the Different Stakeholders: Every stakeholder involved in the decision-making process must have access to a customized dashboard. These bespoke dashboards can provide relevant information, patterns, and cycles of interrogation, enabling stakeholders to make informed decisions aligned with their specific roles and responsibilities. Not all stakeholders have the same needs, and without tailored dashboards, the tool becomes unusable or is deemed useless.

The Role of Data Visualization: Data visualization plays a crucial role in facilitating understanding and empowering the data center collective across the different operational and IT disciplines. Inaccurate data sets, or data that is never visualized, can create dangerous misperceptions of reality, causing operators to miss significant capacity or resource management opportunities. By embracing the concept of a digital twin, data can be presented in a manner that enables users to make faster and well-informed decisions. This approach also breaks down the barriers between intricate data sets and decision-makers. By embracing data visualization, we can gain a comprehensive understanding of the bigger picture, unlocking new possibilities for advancement.

Continuous Improvement and Feedback: Regular stock-taking, measurement, review, and feedback are crucial for driving continuous improvement. Using existing global standards and platforms for feedback facilitates the ongoing refinement of data practices and ensures that organizations can adapt to changing needs. Additionally, undertaking a cost-benefit analysis (CBA) helps prioritize proposed actions and determine the most cost-effective measures. All of this can be supported with one federated system.

Chapter 2

Compliance Obligations: Solving the Challenge of Industry-Wide Standardized Reporting

Mark Acton, Data Center Consultant

Overview

Challenges

Outcomes

RFP Requirements Example 2

Sustainability: Now that sustainability has taken center stage, recent RFPs have become more sophisticated in their requirements. Organizations increasingly recognize the true potential of DCIM in helping to reduce power losses and in simplifying energy consumption analysis to align with their green and sustainability initiatives. Critical to this aspect is the reporting for legislation such as CSRD.

Overview

Compliance Obligations: Solving the Mystery on Industry Based Standards Reporting

In this section of the paper, we explore the importance of adhering to the eight KPIs defined in Parts 2 to 9 of the ISO/IEC 30134 series of international standards:

- ISO/IEC 30134-2:2018 - Part 2: Power usage effectiveness (PUE)

- ISO/IEC 30134-3:2018 - Part 3: Renewable energy factor (REF)

- ISO/IEC 30134-4:2017 - Part 4: IT Equipment Energy Efficiency for servers (ITEEsv)

- ISO/IEC 30134-5:2017 - Part 5: IT Equipment Utilization for servers (ITEUsv)

- ISO/IEC 30134-6:2021 - Part 6: Energy Reuse Factor (ERF)

- ISO/IEC 30134-7:2023 - Part 7: Cooling Efficiency Ratio (CER)

- ISO/IEC 30134-8:2022 - Part 8: Carbon Usage Effectiveness (CUE)

- ISO/IEC 30134-9:2022 - Part 9: Water Usage Effectiveness (WUE)
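Several of these KPIs are, at their core, ratios over the same measured totals, which is why a unified data set matters: every ratio must be computed from consistent, auditable inputs. The Python sketch below shows simplified forms of PUE, REF, ERF, CER, CUE and WUE. The `AnnualTotals` fields and sample figures are hypothetical, CER is approximated as heat removed divided by cooling-system energy, and ITEEsv and ITEUsv are omitted because they depend on server-level benchmark data. The standards themselves add measurement boundaries, categories and rules that this sketch does not reproduce.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AnnualTotals:
    """Hypothetical annual totals drawn from a unified data set."""
    total_facility_kwh: float   # all energy consumed by the data center
    it_equipment_kwh: float     # energy consumed by IT equipment
    renewable_kwh: float        # portion of consumption from renewable sources
    reused_kwh: float           # energy (e.g. waste heat) reused outside the data center
    co2_kg: float               # CO2 emissions attributed to the data center's energy use
    water_litres: float         # site water usage
    heat_removed_kwh: float     # heat removed by the cooling system
    cooling_energy_kwh: float   # energy consumed by the cooling system

def kpis(t: AnnualTotals) -> dict[str, float]:
    """Simplified KPI ratios; the ISO/IEC 30134 parts define the full measurement rules."""
    return {
        "PUE": t.total_facility_kwh / t.it_equipment_kwh,   # Part 2, dimensionless
        "REF": t.renewable_kwh / t.total_facility_kwh,      # Part 3, fraction 0..1
        "ERF": t.reused_kwh / t.total_facility_kwh,         # Part 6, fraction 0..1
        "CER": t.heat_removed_kwh / t.cooling_energy_kwh,   # Part 7, approximation
        "CUE": t.co2_kg / t.it_equipment_kwh,               # Part 8, kg CO2 per IT kWh
        "WUE": t.water_litres / t.it_equipment_kwh,         # Part 9, litres per IT kWh
    }

# Illustrative figures only, not measurements from any real site.
site = AnnualTotals(
    total_facility_kwh=1_500_000, it_equipment_kwh=1_000_000,
    renewable_kwh=600_000, reused_kwh=150_000, co2_kg=300_000,
    water_litres=1_800_000, heat_removed_kwh=1_000_000, cooling_energy_kwh=250_000,
)
for name, value in kpis(site).items():
    print(f"{name}: {value:.2f}")  # PUE: 1.50, REF: 0.40, ERF: 0.10, CER: 4.00, CUE: 0.30, WUE: 1.80
```

Because PUE, CUE and WUE share the IT energy denominator while REF and ERF share the total facility energy, a single error in either meter total propagates into several reported KPIs at once.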

In the Round Table session, it was clear that understanding of what is required to meet these KPIs is conflicting and confused. Add to this the burden of the European Energy Efficiency Directive (EED), the EU Taxonomy Climate Delegated Act (TCDA) and the Corporate Sustainability Reporting Directive (CSRD), which updates the existing Non-Financial Reporting Directive (NFRD), and operators face a real challenge: they are left both to create the strategies and to meet the reporting requirements.

Industry-wide, there is an acknowledgment that the volume of dynamic data is overwhelming, making it hard for operators to manage manually, especially when it comes to meeting the new non-financial reporting directives. Digitized technology to create actionable insights and transparency has now become the single most critical factor for the industry. There is a real need for access to a unified data set that is accurate, automated, coherent, and managed responsibly and actively.

Data centers are extremely complex environments with multiple stakeholders across the entire data center ecosystem. Operated without data driven management insights, these complex sites will inevitably be highly inefficient, especially when every infrastructure layer within the data center is siloed and opaque - this must change.

Defining responsibilities and using appropriate KPIs from an ESG perspective is extremely complex. It is well known that data center operators and customers do not take a joined-up approach to collating and sharing the data needed to meet the reporting requirements and regulations of regional governments and individual countries. As evidence, the Climate Neutral Data Centre Pact (CNDCP) is dead in the water.

For many years, operators of all sizes have been able to greenwash because of a lack of transparency in reporting. The CSRD has been in effect since the start of 2024, yet it is striking that many organisations are not yet responding to it.

Challenges

There is little reliable data on the environmental impact of data centers. A lack of accurate and auditable data on data center operations may even make it harder to obtain development permission and could lead to increased taxation.

ESG reporting is seen as good practice and a part of corporate responsibility. However, there is a need for legislative backing to ensure guidance as well as compliance. The challenge lies not in the legislation itself but in getting large companies to act responsibly and honestly. There’s a lack of understanding of real ESG issues, inconsistent data reporting, and approaches from some companies that are more focused on greenwashing than real improvements.

CHALLENGE 1 - Sustainability of Cloud Computing

Contrary to popular belief, cloud computing is not inherently more sustainable. There’s a misconception about its real efficiency. Large institutions and platform providers are not effectively measuring sustainability. This may be due to perceived lack of time or resources or simply wanting to hide the truth. Some companies use sustainability claims as a marketing tool, with questionable practices like claiming net-zero goals through offsets. In this respect ESG is being ‘Weaponised’ by the large platform providers.

CHALLENGE 2 - Creating Effective IT Data Sets for Sustainability

There’s a need for standardized net-zero transition plans with relevant targets and KPIs. The main issue is the inconsistency in reporting, data usage and understanding. The involvement of procurement and standardization of data collection and reporting are essential.

CHALLENGE 3 - Resource Management and Financial Considerations

Service companies are well aware of the financial benefits of the ESG reporting requirements being imposed, with firms like PwC and EY expecting a significant revenue uplift. Challenges for data center operators include the increased cost of the human resources required for data analysis, the need for dedicated ESG departments, and a lack of understanding of the cost implications of sustainability decisions. There is a general resistance to change, especially regarding asset management, lifecycle replacement and depreciation practices.

CHALLENGE 4 - Need for Standardization in Sustainability Reporting

The bottleneck in sustainability is often not in computing power but in network efficiency. Standardization in reporting and benchmarking is essential for meaningful improvement. There is also a risk of double counting and inaccurate reporting due to the lack of standardized approaches.

CHALLENGE 5 - Auditing and Consequences of Non-Conformance

There’s currently no specific organization for auditing these new ESG regulations, which raises concerns about accountability. The reputational impact and the preferences of the next generation of employees are becoming significant factors in corporate decision-making. There’s a need for a system (like a league table) to effectively compare and monitor company performance in sustainability.

Outcomes of the workshop

Workshop Two: Compliance Obligations - Solving the Mystery on Industry-based standards Reporting

This working party identified the following opportunities for improvement:

Investment Confidence and Data Coherence: There’s a growing concern among funders due to the negative press surrounding the asset class. A significant challenge is the absence of comprehensive data that can adequately assess the sector’s status in terms of compliance, environmental obligations, and shareholder ROI. The lack of coherent data hinders accurate investment decisions and undermines confidence in the sector.

Data Transparency and Accessibility: The inaccessibility and lack of transparency of crucial data can lead to substantial fines and increased taxes. This is exacerbated by the need for proprietary tools to access data from certain equipment, with some manufacturers restricting data access. There is an abundance of operational data, but the barriers to accessing and using it leave important data unreadable, impeding the optimization of data center operations and the full use of management information.

Sustainability Metrics and Sector-Specific Standards: While there are existing sustainability metrics set by governments, there’s a call for more sector-specific standards and metrics. The only way to transform these metrics into actionable insights for better sector performance is collaboration across all vendors.

The Role of Artificial Intelligence: AI is recognized as a key player in managing and interpreting data, which will add value and enhance customer satisfaction for data center operators. However, this is some way off and there is a more immediate issue on reporting that can only be resolved through creating a unified data set.

“People talk about a lack of operational data but it’s simply not true,” says Mark Acton, Business Strategy and Technology Director.

“There is a huge volume of potentially useful operational data, but the fact is we cannot get access to this vast pool of data or, in some instances, it becomes far too complicated and expensive a task to extract information from data center assets that are using proprietary protocols.”

Chapter 3

Operational efficiency: day to day management and challenges of the DC

Antonio Garcia-Suarez, Global Product Manager

Overview

Challenges

Outcomes

Overview

Operational Efficiency: Day to Day Management and Challenges of the Data Center

Data centers are like dynamic, living, breathing beasts—and it takes a village to tame them. The modern data center ecosystem has the manpower to do so, but the secrecy of data is one of the main blockers! Managing a data center encompasses a collaborative network of entities:

- The owner who holds the facility’s reins.

- The operator entrusted with its upkeep.

- The diverse tenants ranging from colocation clients to global enterprises with extensive data infrastructure.

However, this village-like harmony is seldom the reality in today’s landscape, which is characterized by disjointed practices and siloed efforts. Beyond being wholly inefficient, this way of working also puts data centers at odds with the newest legislative changes.

Challenges

Maximizing efficiency and accurately reporting on sustainability metrics is impossible in a fragmented environment where collaboration is lacking. To meet the challenges, harmonization is vital. The data center must transform from a patchwork of individual silos into a connected, unified ecosystem.

The longstanding disconnection between data center departments and their individual goals has led to a fragmented approach to resource utilization, where each unit is focused on serving its own agenda without a common language to communicate its plans. For example, should IT make a change to its infrastructure, this could have ramifications across various aspects of the facility, from power distribution to cooling systems, with knock-on effects for operators and tenants.

Despite these risks, in most data centers the information needed to visualize the potential ripple effects of such adjustments is not available without labor-intensive manual data sanitization. Moreover, with poor communication between departments, it becomes every team for itself.

The result? A cacophony of voices, each speaking its own language and pursuing its separate agenda to the detriment of efficient energy utilization.

What is the short-term fix?

Clearly, there is a pressing requirement for a shared platform that bridges these silos, creating a single source of truth that extends to include gray space, white space, IT, networking, storage, cloud, and connectivity. The aim is a unified view, irrespective of the departmental lens.

Going back to the example of IT needing to make a change, imagine if there were a straightforward and fast way to visualize its ripple effects, communicate the change effectively, and automate the subsequent workflows and ticketing. In essence, what if we could create a common language between departments?

Such a tool would enable every stakeholder to understand the cause and effect of changes in real time across cooling, power, space, and capacity: a platform that gives a bird’s-eye view of the data center’s intricacies, drawing insights from digital twins and a comprehensive data lake that aggregates information from diverse toolsets and departments.
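To make the idea of visualizing ripple effects more concrete, the following Python sketch models a small, hypothetical slice of a facility as a dependency graph and walks it breadth-first to list every asset affected by a change to one rack, grouped by discipline. The asset names, the IMPACTS and DOMAIN maps, and the ripple_effects and impact_by_domain functions are illustrative assumptions, not part of any DCIM product; a real platform would populate such a graph from its data lake or digital twin rather than from hard-coded dictionaries.

```python
from collections import deque

# Hypothetical asset graph (illustrative names only): each key lists the
# assets that are directly affected when that asset changes.
IMPACTS: dict[str, list[str]] = {
    "rack-A01": ["pdu-1", "crah-zone-1", "floor-space-hall-1"],
    "pdu-1": ["ups-1"],
    "ups-1": ["utility-feed-1"],
    "crah-zone-1": ["chiller-1"],
    "chiller-1": [],
    "floor-space-hall-1": [],
    "utility-feed-1": [],
}

# Hypothetical mapping of each asset to the discipline that owns it.
DOMAIN: dict[str, str] = {
    "pdu-1": "power",
    "ups-1": "power",
    "utility-feed-1": "power",
    "crah-zone-1": "cooling",
    "chiller-1": "cooling",
    "floor-space-hall-1": "space",
}


def ripple_effects(changed: str, impacts: dict[str, list[str]]) -> list[str]:
    """Return every asset reachable from `changed`, in breadth-first order."""
    seen = {changed}
    order: list[str] = []
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for neighbour in impacts.get(node, []):
            if neighbour not in seen:  # visit each asset only once
                seen.add(neighbour)
                order.append(neighbour)
                queue.append(neighbour)
    return order


def impact_by_domain(
    changed: str, impacts: dict[str, list[str]], domain: dict[str, str]
) -> dict[str, list[str]]:
    """Group the affected assets by discipline so each team sees its share."""
    grouped: dict[str, list[str]] = {}
    for asset in ripple_effects(changed, impacts):
        grouped.setdefault(domain.get(asset, "unknown"), []).append(asset)
    return grouped


if __name__ == "__main__":
    for team, assets in impact_by_domain("rack-A01", IMPACTS, DOMAIN).items():
        print(f"{team}: {', '.join(assets)}")
```

Grouping the result by discipline is one way to give power, cooling, and space teams the same view of a single IT change; a production system would also need to carry quantities (for example, added kW) along each edge, which this sketch deliberately omits.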

Outcomes of the Workshop

Workshop 3: Operational Efficiency

Day-to-Day Management and Challenges of the DC

Siloed Practices: What hinders the advancement of infrastructure management is that data center functions and departments each operate with their own practices, procedures, concerns, needs, and policies. IT and facilities need a common language and shared benchmarks on which they can collaborate. Bridging the gaps between silos is critical, as is understanding how integrating interdependencies can enable transformation.

Data Management and Confidence Issues: Data sanitization is irregular and siloed, with unclear ownership and responsibility. The lack of confidence in data affects operations, leading to underutilization of helpful tools such as DCIM. It is important to collate useful data accurately so that it can be shared across all teams.

Collaborative Tools Will Bridge the Gap: A platform that integrates siloed information and automates tasks is essential. Communication that encourages discussion across multiple disciplines will build confidence in the data.

Educational Initiatives: The industry must address the need for education on how industry standards affect data center operations and the data those standards require. Recognizing that each data center has unique needs and cannot follow a one-size-fits-all approach is crucial.

Conclusion

In conclusion, the DCIM Round Table of June 2023, attended by over 20 diverse companies including operators, MSPs, professional services, and hardware suppliers, was a pivotal gathering in the realm of data center infrastructure management. This assembly not only highlighted the evolving demands and expectations within the industry but also set a clear direction for future development and innovation.

The key issues identified included the need for comprehensive functionality, industry-wide collaboration, advanced technology (e.g., AI-powered solutions), customization and modularity, vendor knowledge and support, and scalability and security. These factors underscore the necessity for a holistic approach to addressing the challenges and opportunities facing the sector.

These themes highlight a transition towards more intelligent, flexible, and sustainable DCIM practices, emphasizing the need for solutions that are not only technologically advanced but also environmentally conscious and customer-centric.

From the collaborative push for comprehensive and customizable DCIM tools that seamlessly integrate with existing systems and offer extensive functionalities, to the recognition of the transformative potential of AI and the importance of vendor expertise and support, the Round Table has laid down a blueprint for the future. The emphasis on sustainability, reflecting a broader global trend towards environmentally responsible practices, places DCIM at the forefront of green innovation in the tech industry. Furthermore, the focus on scalability and robust security measures aligns with the growing need for data protection in an increasingly digitized world.

As the DCIM landscape continues to evolve, the insights garnered from this event will undoubtedly play a crucial role in shaping the future of data center management. The collaboration and foresight demonstrated by the participants promise not only enhanced efficiency and functionality in the short term but also a sustainable, secure, and customer-focused approach to DCIM in the years to come.

About the authors

Mark Acton

Business Strategy & Technology Director, RiT Tech

Mark Acton is a seasoned expert with over 25 years in data center operations, renowned for ensuring 24x365 availability in world-class facilities. His specialization in delivering business-critical services is matched by a respected leadership role in the data center sector. Mark’s career is distinguished by international experience and a robust skill set that spans data center facilities design and the combined management of IT and operational facilities.

Known for his technical acumen in consulting, Mark excels in integrating sophisticated design with pragmatic management, setting new benchmarks in operational excellence. His global perspective enriches his approach, making him a versatile and insightful leader in the field.

Mark’s contributions have consistently advanced the standards of data center operations, earning him recognition as a visionary in the industry. His dedication and innovative strategies continue to inspire and shape the future of data center management.

Jeff Safovich

CTO, RiT Tech

Jeff brings over 25 years of experience in the technology sector, distinguished by significant contributions to tech product development and innovation. His entrepreneurial journey includes co-founding three tech start-ups, among them SphereUp, which was successfully acquired, and Zoomd, which went public in 2019. His tenure at Comverse, where he led a development group, further demonstrates his expertise in technology leadership and development.

Jeff has been instrumental in revolutionizing Data Center Infrastructure Management (DCIM). His pioneering introduction of the Universal Intelligent Infrastructure Management (UIIM) concept has marked a significant advancement in the industry. This innovation, under Jeff’s guidance, has redefined the management of data center infrastructures, setting new standards for efficiency and intelligence in operations.

Jeff’s profound understanding of technology, coupled with his strategic leadership, has not only propelled RiT Tech to new heights but has also significantly influenced the evolution of data center management practices. His contributions to the field reflect a deep commitment to excellence and innovation in the data center industry.

Antonio Garcia

Global Product Manager, RiT Tech

Antonio Garcia, XpedITe Global Product Manager at RiT Tech, is a distinguished expert in Data Center Infrastructure Management (DCIM) and an advocate for Universal Intelligent Infrastructure Management (UIIM). His career is fueled by a passion for creating accessible, impactful technology solutions in the data center sector.

Holding a degree in Industrial Electronics from the Universidad Carlos III de Madrid, Antonio has honed his expertise in developing and promoting effective DCIM tools. His pivotal role at RiT Tech involves enhancing XpedITe, ensuring it aligns with customer needs in both European and US markets. This task reflects his dedication to evolving DCIM solutions in response to real-world demands.

Antonio’s approach is grounded in practicality and innovation, aiming to make complex technology user-friendly and efficient. His work not only advances XpedITe but also contributes significantly to the broader conversation on intelligent data center management.

Antonio brings extensive knowledge and experience, offering insights into the future of data centers, shaped by intelligent infrastructure and customer-centric design.

Susan Anderton

Marketing Lead & Editor, RiT Tech

Susan Anderton brings a wealth of knowledge and understanding to writing for the data center industry. Her 30 years of experience as a brand consultant, writer, and marketer result in an excellent understanding of brand positioning and persuasive writing for various industries. For over five years, Susan has been gaining an in-depth understanding of the sector, specifically in the DCIM arena, construction, and professional services.

As RiT Tech’s Marketing Lead, Susan has been instrumental in evolving marketing strategies to meet the distinct challenges of DCIM. She is renowned for her ability to clearly communicate complex data center technologies and trends.

Companies that attended the June 2023 event

- JLL Data Centre Solutions

- Morgan Stanley

- Korgi Consulting

- RED Engineering

- Durata

- Hoare Lea

- Kharon-IT Ltd

- Arcadis

- HSSupport

- Uptime Institute

- JLL

- Cadence

- Rahi Systems

- Ri Conx

- Goldman Sachs

- CBRE

- Subzero

- Posetiv Cloud Ltd

- State Street Bank

- Sudlows

- KeySource

- Virtus Data Centres

- Proximity Data Centres

- RiT Tech

- LSEG

- Blue Precision Technologies